Archive for the ‘Cloud’ Category

“Penny Wise and Pound Foolish” is one of my favorite lines. My top personal motto in life is, “It’s the cheap man that pays the most”. Time and time again, I have seen people opt to save a few mere “pennies” while paying a monumental price in the long term. Whatever the situation and whatever the reason, doing due diligence and going the extra mile for good, objective research helps in making wise choices, particularly when it comes to technology. Technology changes so fast, sometimes nullifying current technologies, that it is important to understand the “how” and the “why”, not just the “here” and “now”. Today, I am going to talk about a topic that has been covered over and over again: “Data Management” in the current state of technology.

The New Data Management Landscape

Although I have been writing about this topic for many years, this post addresses some of the new challenges most IT managers face. With the proliferation of data from IoT, Big Data, Data Warehousing, etc., combined with data security and the dynamic implications of governance and compliance, there are far too many variables in play to effectively and efficiently “manage” data. Implementing a business mandate can have far-reaching implications for data management. It raises questions like: What is the balance between storing data and securing data? What is the cost of going too far one way or the other? How can I communicate these implications and cost factors to management?

False Security: The Bits and Bytes vs. ROIs and TCOs

One of the biggest challenges in IT is communicating to the people who “sign the checks” the need to spend money (in most cases, more money) on technology: the money needed to effectively and successfully implement the mandates put forth by management. Unfortunately, this is not an easy task. It is mastered by only a few in the industry, often highly regarded professionals living in the consulting field. Guys who are good at understanding the “Bits and Bytes” are usually illiterate on the business side of things. The business side understands the language of “Return on Investment” (ROI) and “Total Cost of Ownership” (TCO) and couldn’t care less what a “Bit or Byte” is. The end result: many systems out there are poorly managed, and management has no idea about it. The disconnect is real, and companies do business as usual every day until a crisis arises.

IT managers, directors and CIOs/CTOs need to be acutely aware of current systems and technologies while remaining on the cutting edge of new technologies, so they can run the day-to-day operations as well as support all of the new business initiatives. The companies that do well at closing this gap are the ones that have good IT management and good communication with the business side of the company. This is also directly related to how much is spent on IT. It is a costly infrastructure, but these are the systems that can meet the demands of management and compliance.

The IT Tightrope

Understanding current and new technologies is key to an effective data management strategy. Money spent on technology today may be rendered useless or, worse, may hinder the use of new technology needed to meet the demands of the business. It is a constant balancing act because today’s solution can be tomorrow’s problem.

Data Deduplication

Data deduplication is a mature technology and has been an effective way to tame the data beast. In a nutshell, it is an algorithm that scans data for duplication. When it sees data it has already stored, it does not write that data again but puts a small piece of metadata in its place. In other words, the metadata is basically saying, “I’ve got this data over there, so don’t rewrite it”. This happens over the entire volume(s) and is a great way to save storage capacity. But with data security at the top of most companies’ minds, data encryption is the weapon of choice today. Even where it is not, compliance mandates from governing agencies are forcing companies’ hands to implement encryption. But how does encryption impact data management? Encryption basically takes data and scrambles it with an encryption key so that the output looks random. That makes data deduplication more difficult, because identical data may no longer look identical once it has been encrypted. This is a high-level generalization and there are solutions out there, but these considerations must be weighed when making encryption decisions. Additionally, encryption adds complexity to data management. Without proper management of encryption keys, data can be rendered unusable.
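To make the “metadata in its place” idea concrete, here is a minimal sketch of deduplication in Python. It is only an illustration, not how any particular storage product works: the `DedupStore` class, the fixed 4 KB chunk size and the use of SHA-256 fingerprints are all my own assumptions for the example.

```python
import hashlib

CHUNK_SIZE = 4096  # hypothetical fixed chunk size in bytes


class DedupStore:
    """Toy content-addressed store: every unique chunk is kept only once."""

    def __init__(self):
        self.chunks = {}  # SHA-256 fingerprint -> chunk bytes

    def write(self, data: bytes) -> list:
        """Store data and return its "recipe": the list of chunk fingerprints."""
        recipe = []
        for offset in range(0, len(data), CHUNK_SIZE):
            chunk = data[offset:offset + CHUNK_SIZE]
            fingerprint = hashlib.sha256(chunk).hexdigest()
            # Keep the chunk only if this fingerprint has not been seen before.
            self.chunks.setdefault(fingerprint, chunk)
            # The fingerprint is the metadata that says "I've got this data over there".
            recipe.append(fingerprint)
        return recipe

    def read(self, recipe: list) -> bytes:
        """Rebuild the original data from its recipe."""
        return b"".join(self.chunks[fingerprint] for fingerprint in recipe)

    def stored_bytes(self) -> int:
        """Bytes physically kept after deduplication."""
        return sum(len(chunk) for chunk in self.chunks.values())


store = DedupStore()
data = b"A" * CHUNK_SIZE * 10 + b"B" * CHUNK_SIZE  # 11 chunks, only 2 distinct
recipe = store.write(data)
print(len(data), "bytes written,", store.stored_bytes(), "bytes stored")
```

In this toy case 11 chunks go in and only 2 are physically kept. It also hints at why encryption gets in the way: if each copy of the same data is encrypted with a different key or a random initialization vector, the chunks no longer produce matching fingerprints, so there is nothing left to deduplicate.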

Data Compression

Back in the days of DOS, Norton Utilities had a great toolbox of utilities. One of them compressed data. I personally did not use it, as it was risky at best. It wasn’t a chance I wanted to take. Besides, my data was copied to either 5.25” or 3.5” floppies. Later, Windows came along with compression on volumes. I was not one to venture into that territory; I had enough challenges just running Windows normally. I have heard and seen horror stories about compressed volumes, from unrecoverable data to sluggish and unpredictable performance. The word on the street was that it just wasn’t a fully baked feature and was a “use at your own risk” kinda tool. Backup software also offered compression, but backups were hard enough to do without it. Adding compression to backups just wasn’t done… period.

Aside from the PKZip application, compression had a bad rap until hardware compression came along. Hardware compression basically offloads the compression process to a dedicated embedded chipset. This was magic because there was no resource cost to the host CPU. It is similar to high-end gaming video cards, which use Graphics Processing Units (GPUs) to offload high-definition, extreme-texture rendering at high refresh rates. Hardware compression became mainstream, and compression technology then went mostly unnoticed until recently. Compression is cool again, made popular as a feature for data on SSDs. Some IT directors that I talked to drank the “Kool-Aid” on compression for SSDs. It only made sense when SSDs were small in capacity and expensive. Now that SSDs are breaking the 10TB per drive mark and are cheaper per GB than spinning disk, compression on SSDs is not so cool anymore. It’s going the way of the “mullet” hairstyle, and we all know where that went… nowhere. Adding compression to SSDs is another layer of complexity that can be removed. Better yet, don’t buy into the compression gimmick on SSDs; rather, look at the overall merits of the system and the support of the company that is offering the storage. What good is a storage system if the company is not going to be around?

What is your data worth?

With so many security breaches happening, seemingly every other week, it is alarming to me that we still want to use computers for anything. I am a customer of some of the businesses affected by hackers. I have about 3 different complimentary subscriptions to fraud prevention services because of these breaches. I just read an article about a medical facility in California that was hit with ransomware. Faced with a demand for payment in the millions via bitcoin, the business went back to pen and paper to operate. What’s your data worth to you? With all of these advances in data storage management and the ever-changing requirements from legal and governing agencies, an intimate knowledge of the business and data infrastructure is required to properly manage data, not just to keep the data and protect it from hackers but also to protect it from natural disasters.

Posted by yobitech on February 26, 2016 at 12:23 pm under Backup, Cloud, General.

Comments Off on Penny Wise and Pound Foolish.

The Internet made mass data accessibility possible. Back when computers were storing MBs of data locally on the internal hard drive, GB hard drives were available but priced only for the enterprise. I remember seeing a 1GB hard drive for over $1,000. Now we are throwing 32GB USB drives around like paper clips. We are now moving past 8TB, 7200 RPM drives and mass systems storing multiple PBs of data. With all this data, it is easy to be overwhelmed. This is known as information overload: there is so much information available that the relevant information becomes unusable. We can’t tell usable data from unusable data.

In recent years, multi-core processing combined with multi-socket servers has made HPC (High Performance Computing) possible. HPC, or grid computing, is the linking of these highly dense compute servers (local or geographically dispersed) into one giant super-computing system. With this type of system, computations that would traditionally take days or weeks are done in minutes. These gigantic systems laid the foundation for companies to have smaller-scale HPC systems that they use in-house for R&D (Research and Development).

This concept of collecting data in a giant repository was first called data-mining. Data-mining is the same concept used by the Googles and the Yahoos of the world. They pioneered this as a way to navigate the ever-growing world of information available on the Internet. Google came out with an ingenious, lightweight piece of software called “Google Desktop”. It was a mini version of data-mining for the home computer. I personally think it was one of the best tools I have ever used on my computer. It was later discontinued for reasons I am not aware of.

The advancements in processing and compute made data-mining possible, but for many companies, it was an expensive proposition. Data-mining was limited by the transfer speeds of the data on the storage. This is where the story changes. Today, with SSD technologies shifting in pricing and density, due to better error correction and fault predictability and manufacturing advancements, storage has finally caught up.

The ability of servers to quickly access data on SSD storage to feed HPC systems opens up many opportunities that were not possible before. This is called “Big Data”. Companies can now run Big Data to take advantage of mined data. They can look for trends and correlate and analyze data quickly to make strategic business decisions and take advantage of market opportunities. For example, a telecommunications company can mine its data to look for dialing patterns that are abnormal for its subscribers. The faster fraud can be identified, the less financial loss there will be. Another example is a retail company looking to maximize profits by stocking its shelves with “hot” ticket items. This can be achieved by analyzing sold items and trending crowd-sourced data from different information outlets.
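As a rough illustration of the telecom example, here is a small Python sketch that flags a subscriber whose call volume today is far outside their own history. Everything in it is made up for the example: the subscriber names, the call counts and the “3 standard deviations” rule are assumptions, and a real fraud system would look at far more than daily totals.

```python
from statistics import mean, stdev


def is_abnormal(history, today, threshold=3.0):
    """True if today's count is more than `threshold` standard deviations
    away from the subscriber's own historical average."""
    average = mean(history)
    spread = stdev(history)
    if spread == 0:
        # A perfectly flat history: any change at all is unusual.
        return today != average
    return abs(today - average) / spread > threshold


# Hypothetical daily call counts for the past week, per subscriber.
history = {
    "subscriber-a": [12, 15, 11, 14, 13, 12, 16],
    "subscriber-b": [3, 4, 2, 5, 3, 4, 3],
}
today = {"subscriber-a": 14, "subscriber-b": 90}

flagged = [name for name in history if is_abnormal(history[name], today[name])]
print(flagged)  # ['subscriber-b']
```

The rule itself is trivial. The hard part, and the reason storage speed matters, is running this kind of check across millions of subscribers quickly enough to catch the fraud while it is still happening.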

SSDs are enabling the data-mining/Big Data world for companies that are becoming leaner and more laser-focused on strategic decisions. In turn, these HPC systems pay for themselves through the overall savings and profitability that Big Data brings. The opportunities are endless as Big Data has extended into the cloud. With collaboration combined with Open Source software, the end results are astounding. We are producing cures for diseases, securing financial institutions and finding inventions through innovations and trends. We are living in very exciting times.

Posted by yobitech on July 13, 2015 at 3:03 pm under Cloud, General, SSD.

Comments Off on Big Data.

Americans are fascinated by brands. Brand loyalty is big, especially when “status” is tied to a brand. When I was in high school back in the 80s, my friends and I would work diligently and save our paychecks to buy “Guess” jeans, “Zodiac” shoes and “Ton Sur Ton” shirts because that was the “cool” look. I put in many hours working the stockroom at the supermarket and delivering legal documents as a messenger. In 1989, Toyota and Nissan entered the luxury market as well, with Lexus and Infiniti respectively, after the success of Honda’s upscale luxury performance brand, Acura, which started in 1986. Aside from the marketing, how much value (apart from the status) does a premium brand bring? Would I buy a $60,000 Korean Hyundai Genesis over the comparable BMW 5 Series?

For most buyers in the Enterprise Computing space, brand loyalty was a big thing. IBM and EMC led the way in the datacenter for many years. The motto, “You’ll never get fired for buying IBM”, was the perception, and as the saying goes, “Perception is Reality”. That rang true for many CTOs and CIOs. But with the economy ever tightening and IT treated as an “expense” line item for businesses, brand loyalty had to take a back seat. Technology startups with innovative and disruptive products paved the way to looking beyond the brand.

I recently read an article about hard drive reliability published by a cloud storage company called BackBlaze. The company is a major player in safeguarding user data and touts over 100 petabytes of data stored on more than 34,880 disk drives. That’s a lot of drives. With that many drives in production, it is quite easy to track drive reliability by brand, and that’s exactly what they did. The article can be found at the link below.

https://www.backblaze.com/blog/hard-drive-reliability-update-september-2014/

BackBlaze had done an earlier study back in January of 2014, and this article contained updated information on the brand reliability trends. Not surprisingly, the reliability data remained relatively the same. What the article did point out was that the failure rate of the Seagate 3TB drives rose from 9% to 15%, and that of the Western Digital 3TB drives jumped from 4% to 7%.

Company or “branding” plays a role as well (at least with hard drives). Popular brands like Seagate and Western Digital pave the way. They own the low-end hard drive space and sell lots of drives. Hitachi is more expensive and sells relatively fewer drives than Seagate. While Seagate and Western Digital may be more popular, the manufacturing, assembly and sourcing of parts are an important part of the picture. Some hard drive manufacturers market their products to the masses, while others market to a niche. Manufacturing costs and processes vary from vendor to vendor. Some vendors may cut costs by assembling drives where labor is cheapest, and some may manufacture drives in unfavorable climate conditions. These are just some of the factors that can reduce the MTBF (Mean Time Between Failures) rating of a drive. While brand loyalty with hard drives may lean towards Seagate and Western Digital, popularity here does not always translate into reliability. I personally like Hitachi drives more, as I have had better longevity with them than with Seagate, Western Digital, Maxtor, IBM and Micropolis.

I remember using Seagate RLL hard drives in the 90s and, yes, I had failed drives then too. But to be fair, Seagate has been around for many years and I have had many success stories as well. Kudos to Seagate, as they have weathered all these years of economic hardships and manufacturing challenges, from typhoons to parts shortages, while providing affordable storage. Even with higher failure rates, failures today are easily mitigated by RAID technology and solid backups. So it really depends on what you are looking for in a drive.

Brand loyalty is a personal thing but make sure you know what you are buying besides just a name.

Thanks to BackBlaze for the interesting and insightful study.

Posted by yobitech on October 13, 2014 at 9:11 am under Backup, Cloud, General.

Comments Off on Brand Loyalty.

The Software Defined World

Before computers were around, things were typically done with pencil and paper. The introduction of the computer revolutionized the world, from the way we do business to how we entertain ourselves. It is one of the greatest inventions ever, ranked up there in my book along with the automobile, the microwave and the air conditioner.

From a business standpoint, computers gave companies an edge. The company that leverages technology best will have the greatest competitive edge in its industry. In a similar fashion, on a personal level, the people with the newest and coolest toys are the ones who can take advantage of the latest software and apps, giving them the best efficiency in getting their jobs done while attaining bragging rights in the process.

The Computer Era has seen some milestones. I have listed some highlights below.

The PC (Personal Computer)

The mainframe was the dominant computing platform. Mainframes were huge machines, used mainly by companies with huge air-conditioned rooms to house them. Mainframes were not personal. No individual had the room or the money to own one. Aside from businesses, access to mainframes was mainly found in specialized schools, colleges, libraries and/or government agencies.

The industry was disrupted by a few entries into home computing.

TANDY TRS-80 Model 1

Tandy Corporation made the TRS-80 Model 1 computer, powered by the BASIC language. It had marginal success, with most of its users in schools. There wasn’t really much software for it, but it was a great educational tool for learning a programming language.

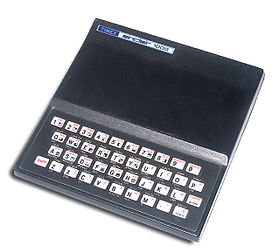

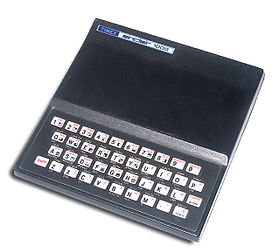

TIMEX SINCLAIR

The Timex Sinclair was another attempt, but the underpowered device, with its flat membrane keyboard, was very limited. It had a black and white screen and used an audio cassette player as the storage device. There was more software available for it, but it never took off.

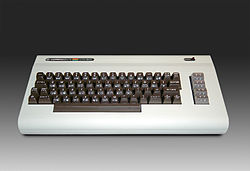

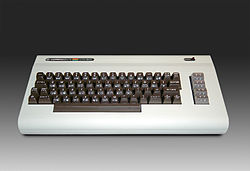

COMMODORE VIC 20/ COMMODORE 64

The Commodore Vic 20 and Commodore 64 were a different kind of computer. They had software titles available at launch. They were in color and offered “sprite” graphics that allowed for detailed, smooth color animations. The computers were a hit, as they also offered an affordable floppy disk drive (5.25”) as the storage device.

APPLE AND IBM

Apple and IBM paved the way into homes, not just because they had better hardware but because there was access to software such as word processors, spreadsheets and databases (and not just games). This was the arrival of the Personal Computer. There were differences between the two: IBM was largely text-based and not user friendly, while Apple took a graphical route, offering a mouse and a menu-driven Operating System that made the computer “friendly”.

Commoditization for Progress

Once the home computer age had begun, the commoditization of the industry started shortly thereafter. With vendors like Gateway, Lenovo, HP and Dell making them, computers became cheap and plentiful. With computers so affordable and plentiful, HPC (High-Performance Computing) or “grid” computing became possible. HPC/Grid Computing is basically the use of 2 or more computers in a logical group that shares resources and acts as one large computing platform. Trading firms, hedge funds, geological study and genetic/bio research companies are just some of the places that use HPC/Grid Computing. The WLCG (Worldwide LHC Computing Grid) is a global collaboration of more than 170 computing centers in 40 countries that provides resources to store, distribute and analyze the over 30 petabytes of data generated by the Large Hadron Collider (LHC) at CERN on the Franco-Swiss border. As you can see, commoditization enables new services and sometimes “disruptive” technologies (i.e., HPC/Grid). Let’s take a look at other disruptive developments…

The Virtual Reality Age

With PCs and home computers entering the home, the world of “Virtual Reality” was the next wave. Multimedia-capable computers made it possible to dream. Fictional movies like Tron and The Matrix gave us a glimpse into the world of virtual reality. Virtual reality had limited success over the years and wasn’t a disruptive technology until recently. With 3D movies making a comeback, 3D TVs widely available and glass cameras, virtual reality is more HD and is still being defined and redefined today.

The Internet

No need to go into extensive detail about the Internet. We all know it is a major disruption in technology because none of us know what to do with ourselves when it is down. I’ll just recap its inception. It started as a government/military application (mostly text-based) and stayed that way for many years; adoption of the Internet for public and consumer use was established in the early 90s. The demand for computers with better processing and graphics capabilities was pushed further as the World Wide Web and gaming applications became more popular. With better processing came better gaming and better web technologies. Java, Flash and 3D rendering made on-line games such as Call of Duty and Battlefield possible.

BYOD (Bring Your Own Device)

The latest disruptive trend is BYOD, as the lines between work and personal computing devices are being blurred. Most people (not me) prefer to carry one device. As the mobile phone revolutionized 3 industries (Internet, phone, music device), it was only natural that the smartphone would be our device of choice. With the integration of high-definition cameras into phones, we are finding less and less reason to carry a separate device just to take pictures, or a separate device for anything. There is a saying today, “There is an App for that”. Cameras have been commoditized as well: Nokia has a phone with a 41 megapixel camera built in. With all that power in the camera, other challenges arise, like the bandwidth and storage needed to keep and share such huge pictures and videos.

The Software Defined Generation

There is a new trend that has been around for a while but is finally going to take off. This disruptive technology is called Software Defined “X”, the “X” being whatever the industry is. One example of Software Defined “X” is 3D printing. It was science fiction at one time to be able to just think up something and bring it into reality, but now you can simply define it in software (CAD/CAM) and the printer will make it. What makes 3D printing possible is that commoditization has brought down the cost of the printer and the printing materials. It wasn’t that we lacked the technology to do this earlier; it was just cost prohibitive. Affordability has brought 3D printing into our homes.

Software Defined Storage

Let’s take a look at Software Defined Storage. Storage first started out as a floppy disk or a hard drive. Then it evolved into a logical storage device consisting of multiple drives bound together in a RAID set for data protection. The RAID concept was then scaled up into the SANs that store most of our business-critical information today. RAID has since been commoditized and is now a building block for Software Defined Storage. Software Defined Storage is not a new concept; it just was not cost effective. Now that high-performance networking and processing have become affordable, Software Defined Storage is a reality.

Software Defined Storage takes the RAID concept and virtualizes small blocks of storage nodes (appliances, or mini SANs), grouping them together as a logical SAN. Because Software Defined Storage is made up of many small components, these components can be anywhere in the architecture (including the cloud). With networking moving to 10Gb and 40Gb speeds, Fibre Channel at 16Gb, WANs (Wide Area Networks) running at LAN speeds, processors with 12+ cores (per physical processor) and memory that responds at sub-millisecond speeds, Software Defined Storage can be virtually anywhere. It can also be stretched over a campus or even between cities or countries.

In the world of commoditizing everything, the “Software Defined” era is here.

Posted by yobitech on September 23, 2014 at 11:48 am under Cloud, General.

Comments Off on The Software Defined World.

Having been in the IT industry for over 20 years, I have worn many hats in my days. It isn’t very often that people actually know what I do. They just know I do something with computers. So by default, I have become my family’s (extended family included) support person for anything that runs on batteries or plugs into an outlet. In case you don’t know, I am a data protection expert, and I am not often troubleshooting or setting up servers anymore. In fact, I spend most of my days visiting people and making blueprints with Microsoft Visio. I have consulted, validated and designed data protection strategies and disaster recovery plans for international companies, major banks, government, military and private sector entities.

Those who ARE familiar with my occupation often ask me, “So what does a data protection expert do to protect his personal data?” Since I help companies protect petabytes of data, I should have my own data protected also. I am probably one of the few professionals that actually protect their own data to the extreme. It is sometimes a challenge, because I have to find a balance between cost and realistic goals. It is always easier to spend other people’s money to protect their data. There’s an old saying that, “A shoemaker’s son has no shoes”. There is some truth in that. I know some people in my field who have lost their own data while being paid to protect others’.

Now welcome to my world. Here is what I do to protect my data.

1. Backup, Backup and Backup – Make sure you back up! And often. Doing daily backups is too tedious, even for a paranoid guy like me. It is unrealistic also. Backing up weekly or bi-weekly is perfectly sufficient. But there are other things that need to be done as well.

2. External Drives – External drive backups are not only essential, they are the only way we can survive, as keeping all your pics and home videos on your laptop or desktop is not realistic. Backing up to a single external drive is NOT recommended. That is a single point of failure, as that drive can fail with no other backups around. I use a dual (RAID1) external drive. It is an external enclosure that writes to 2 separate drives at the same time, so there are always 2 copies. I also have 2 other copies on 2 separate USB drives. You should avoid NAS drives, as they add an additional layer of complexity. When they fail, they fail miserably. Often the NAS piece is not recoverable and the data is stranded on the drives. At that point, a data recovery specialist may have to be brought in to recover the data. This can cost thousands of dollars.

3. Cloud Backup – There are many different cloud services out there and most of them are great. I use one that puts no limit on how much I can back up. So all of my files are backed up to the cloud whenever my external drives are loaded with new data.

4. Cloud Storage – Cloud storage is different from cloud backup, as this service runs on the computers that I use. Whenever I add file(s) to my hard drive, they are instantly replicated to the cloud service. I use Dropbox at home and Microsoft SkyDrive for work, so my files are saved in the cloud as well as on all my computers. I also have access to my files via my smartphone or tablet. In a pinch, I can get to my files from any Internet browser. This feature has saved me on a few occasions.

5. Physical Off-Site Backup – I back up one more time to an external hard drive that I copy my files onto once a year. That drive goes to my brother-in-law’s house. You can also use a safe deposit box for that. This way, if there is a flood or my house burns down, I have a physical copy off-site.
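For the do-it-yourself crowd, the “more than one copy” part of the list above can be sketched in a few lines of Python. This is not the tool I use, just a minimal illustration under my own assumptions: the `backup` helper, the folder names and the SHA-256 check are all hypothetical, and the demo runs in a temporary folder so it touches nothing real.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Checksum of a file, read in 1 MB blocks so large videos are fine."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for block in iter(lambda: handle.read(1 << 20), b""):
            digest.update(block)
    return digest.hexdigest()


def backup(source: Path, destinations: list) -> list:
    """Copy every file under `source` to each destination folder and
    return the copies whose checksum does not match the original."""
    failures = []
    for original in source.rglob("*"):
        if not original.is_file():
            continue
        expected = sha256_of(original)
        for destination in destinations:
            copy = destination / original.relative_to(source)
            copy.parent.mkdir(parents=True, exist_ok=True)
            shutil.copy2(original, copy)  # copy2 also keeps file timestamps
            if sha256_of(copy) != expected:
                failures.append(copy)
    return failures


# Demo: the two "drives" are just hypothetical folders inside a temp directory.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    source = root / "photos"
    (source / "2014").mkdir(parents=True)
    (source / "2014" / "beach.jpg").write_bytes(b"not really a jpeg")
    drives = [root / "usb1", root / "usb2"]
    failed = backup(source, drives)
    all_present = all((drive / "2014" / "beach.jpg").is_file() for drive in drives)
    print("failed copies:", failed, "| all copies present:", all_present)
```

The checksum step is the part most people skip. A backup you never verify is a backup you only hope you have.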

Data is irreplaceable and should be treated as such. My personal backup plan may sound a bit extreme, but I can sleep well at night. You don’t have to follow my plan but a variation of this plan will indeed enhance what you are already doing.

Posted by yobitech on July 17, 2014 at 8:09 am under Backup, Cloud, General.

Comments Off on Protecting Your Personal Data.

Technology changes so fast that if I were ever sequestered for a court case for more than 1 year, I would have to change careers or start over. There are so many changes happening on a daily basis that it is mind-boggling. I have seen professionals come and go, and only a few have remained in the tech industry. Those with the keen ability to stay one step ahead and reinvent themselves are the ones that are thriving. I personally love the fast-paced, ever-changing field, and I use it to my advantage. It keeps me fresh and sets me apart from my competition. Most would see this as a negative aspect of their career, but I see it as an opportunity.

I recently wrote about “Cannibalization” and the willingness of companies to engage in it purposely. It is a necessary evil to survive in this industry. As invincible as Microsoft may have seemed in the early 2000s, they are showing the signs of a big company that has lost its agility. They are getting hit on all fronts… the Open Source community, Apple, Android, Google Chrome and Amazon are just a few names. After getting slapped around for the past 6-8 years, Microsoft has become a company with an identity crisis. Just look at their latest products… Windows 8 and Windows Server 2012. What is it???? Are they a business solutions company or are they a consumer products company? Is it a PC or a tablet? Is it a server or a desktop? It will be interesting to see where Microsoft goes. Will they be able to reinvent themselves or go the way of the typewriter?

There are a few game-changers that come along in technology and disrupt “life” as we know it. Today, that disruptive technology is the “Cloud”. The “Cloud” sounds dark, but it is a shining star. It is a game-changer, a breath of fresh air in this economy. The “Cloud” is really nothing more than remote computing. It is the details of what each cloud company offers that set them apart.

Remote computing, or telecommuting, is the earliest form of cloud computing. In the mid 80s there was “PC Anywhere” and dial-in using RAS (Remote Access Servers). Then came dedicated remote sites, usually hooked up via dedicated T1s and ISDN lines. Then came the Internet… Al Gore changed everything. This opened up many more possibilities with IP tunneling and encryption. With mobile and tablet computing, BYOD (Bring Your Own Device) is the preferred MO (Modus Operandi or Method of Operation) for end user computing.

Cloud technology has been around for a while, but it never really caught on until now. The skies are now dark with some serious Cloud offerings. Driven by a tight economy, it is all about the bottom line. Companies that once thought about buying servers and building out data centers are now looking at paying a monthly subscription for the same computing resources without the burden of managing them.

What does this mean for the traditional IT guy with tons of certifications and experience? They will find themselves outmatched and outwitted if they don’t reinvent themselves. For those looking to get into the tech industry, this is good news. The cloud is a great entry point, as it is starting to take off. IT is a revolving door. There are just as many experienced folks leaving (voluntarily and involuntarily) as there are newcomers getting in. So whether you are a veteran like me or a newbie trying to get in, this is a great time. The future looks cloudy, and it is good.

Posted by yobitech on January 15, 2013 at 2:38 pm under Cloud, General.

Comments Off on The Future is Cloudy.

I remember when I got my first computer. It was a Timex Sinclair.

Yes, the watch company made a computer. It had about 2K of RAM and a flimsy membrane keyboard. It had no hard drive or floppy disk drive; instead, it used a cassette tape player as the storage device. That’s right, the good old cassette tape. I bought a game on cassette tape with the computer back in the 80s and it took about 15 minutes to load the game. It was painful, but worth it.

From there, I got a Commodore Vic20 with a 5.25” floppy drive. Wow, this was fast! Going from cassette to floppy disk seemed like a leap of years. I even used a hole punch to notch the side of the disk so I could use the flip side of the floppy. We didn’t have that many choices back then. Most games and applications loaded and ran right off of removable media.

I remember when hard drives first came out. They were very expensive and out of reach of the masses. A few years later, I saved up enough money to buy a 200MB hard drive by Western Digital. I paid $400 for that hard drive. It was worth every penny.

Since then, I have always been impressed by how manufacturers are able to get more density on each disk every 6 months. Now with 3TB drives coming out soon, it never ceases to amaze me.

It is interesting how storage is going in 2 directions, Solid State Disk (SSD) and Magnetic Hard Disk (HD). If SSD is the next generation, then what does that mean for the HD? Will the HD disappear? Not for a while… This is because there are 2 factors: Cost and Application.

At the present time, a 120GB SSD costs about the same as a 1TB hard drive. So on price alone it makes sense to go with the 1TB drive. Although this may be true from a capacity standpoint, the Application ultimately dictates what drive(s) are used to store the data. For example, although a 1TB drive may be able to hold the video for a video editor, the drive may be too slow for the data to be processed. The same goes for databases, where response time and latency are critical to the application.

Capacity alone simply will not cut it. This is where using multiple disks in a RAID (Redundant Array of Independent Disks) set can provide performance as well as capacity. SSDs can also be used, because their access and response times are inherently faster.
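To show how capacity changes with the RAID level you pick, here is a small Python sketch of the usual textbook arithmetic. The function name and the four 1TB drives are just my example, and real arrays lose a bit more space to formatting and hot spares.

```python
def raid_usable_tb(level: int, drives: int, drive_tb: float) -> float:
    """Usable capacity in TB for common RAID levels, assuming equal-size drives."""
    minimum = {0: 2, 1: 2, 5: 3, 6: 4, 10: 4}
    if level not in minimum:
        raise ValueError(f"unsupported RAID level: {level}")
    if drives < minimum[level]:
        raise ValueError(f"RAID {level} needs at least {minimum[level]} drives")
    if level == 0:   # striping only: all capacity, no protection
        return drives * drive_tb
    if level == 1:   # mirroring: every drive holds the same data
        return drive_tb
    if level == 5:   # striping with one drive's worth of parity
        return (drives - 1) * drive_tb
    if level == 6:   # striping with two drives' worth of parity
        return (drives - 2) * drive_tb
    # RAID 10: striped mirrors, so half the drives hold copies
    if drives % 2:
        raise ValueError("RAID 10 needs an even number of drives")
    return drives / 2 * drive_tb


# Example: four 1TB drives at each level.
capacities = {level: raid_usable_tb(level, 4, 1.0) for level in (0, 1, 5, 6, 10)}
for level, usable in capacities.items():
    print(f"RAID {level}: {usable} TB usable")
```

The same four drives give you anywhere from 1TB to 4TB depending on how much protection you want, which is exactly why the application has to drive the design and not the sticker capacity.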

As long as there is a price-to-capacity gap, the HD will be around. So architecting storage today is a complex art in this dynamic world of applications.

I hope to use this blog as a forum to shed light on storage and where the industry is going.

Posted by yobitech on April 18, 2011 at 2:16 pm under Cloud, General, RAID, SAS, SCSI, SSD.
