Archive for the ‘SCSI’ Category

If you have been keeping up with the storage market lately, you will have noticed a considerable drop in SSD prices. It has been frustrating to see that over the past 4 to 5 years there have not been many changes in SSD capacities and prices, until now. TLC (Triple Level Cell) SSDs are the game-changer we have been waiting for. With TLC capacities at almost 3TB per drive and projected to approach 10TB per drive in another year, what does that mean for the rotational disk?

That’s a good question, but there is no hard answer yet. As you know, the technology industry can change on a dime. TLC drives are the next step in the evolution of hard drives as a whole. They are economical because of their high capacity, and they are energy efficient because there are no moving parts. Finally, the MTBF (Mean Time Between Failures) is pretty good, which matters because SSD reliability was a key factor in the adoption of SSDs in the enterprise.

MTBF

MTBF is always a scary thing because it stands in for the life expectancy of a hard drive. If you recall, I blogged some time ago about the “Perfect Storm” effect, where hard drives in a SAN were deployed together and manufactured in the same batches at the same time, so it is not uncommon to see multiple drive failures in a SAN that can result in data loss. With rotational disks at 8TB per 7.2k drive, it can conceivably take days, or even weeks, to rebuild a single drive. For rotational disk, I think that is a big risk to take. With TLC SSDs at around 10TB, there is not only a cost and power efficiency advantage, but also a lower risk when it comes to RAID rebuild time. Rebuilding a 10TB SSD can take a day or 2 (sometimes only hours, depending on how much data is on the drive).

The MTBF is higher because SSDs fail predictably, by logically marking worn-out cells as dead (barring other failures). This is the normal wear and tear of the drive: each cell supports a limited number of writes and re-writes before it is marked dead. In smaller drives, the write rate per cell is much higher because there are fewer cells to spread the writes across. With the large number of cells in TLC SSDs, each cell is written to far less often than in a much smaller drive. So moving to a larger capacity actually increases the durability of the drive. The reverse is true for rotational drives, which become less reliable as capacity increases.
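To put rough numbers on the rebuild and wear arguments above, here is a minimal Python sketch. The rebuild rates, the fill ratio and the daily write volume are placeholder assumptions for illustration, not vendor figures; real rebuild speed depends heavily on the array, the RAID level and the production load during the rebuild.

```python
# Back-of-the-envelope rebuild-time and wear arithmetic.
# All rates and workloads used here are illustrative assumptions.


def rebuild_hours(capacity_tb: float, rebuild_mb_per_s: float,
                  fill_ratio: float = 1.0) -> float:
    """Hours to rebuild one drive at a sustained rebuild rate.

    fill_ratio < 1.0 models arrays that only rebuild the data actually
    stored on the drive instead of every block.
    """
    megabytes = capacity_tb * 1_000_000 * fill_ratio  # decimal TB -> MB
    return megabytes / rebuild_mb_per_s / 3600


def drive_writes_per_day(capacity_tb: float, daily_writes_tb: float) -> float:
    """How many times per day each cell is rewritten on average, assuming
    wear leveling spreads the host writes evenly across the whole drive."""
    return daily_writes_tb / capacity_tb


if __name__ == "__main__":
    # Hypothetical rebuild rates: a busy 7.2k HDD vs. an SSD.
    print(f"8TB HDD at 50 MB/s:   {rebuild_hours(8, 50):.1f} h")
    print(f"10TB SSD at 500 MB/s: {rebuild_hours(10, 500):.1f} h")
    print(f"10TB SSD, half full:  {rebuild_hours(10, 500, 0.5):.1f} h")
    # Same hypothetical 2TB/day workload on a small and a large SSD.
    print(f"0.5TB SSD: {drive_writes_per_day(0.5, 2):.1f} drive writes/day")
    print(f"10TB SSD:  {drive_writes_per_day(10, 2):.1f} drive writes/day")
```

Under these assumptions the 8TB spinning disk needs close to two days of sustained rebuilding versus a few hours for the SSD, and the same daily workload rewrites each cell of the 10TB drive 20 times less often than on the 0.5TB drive.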

So what does it mean for the rotational disk?

Here are some trends that are happening and where I think it will go.

1. 15k drives will still be available, but in limited capacities. This is because of legacy support: many systems out there still run 15k drives. There are even 146GB drives still running strong that will need additional capacity due to growth and/or replacements for failed drives. This will be a staple in the rotational market for a while.

2. SSDs are not the answer for everything. Although we may all think so, SSDs are not the best fit for every workload and application. For streaming video, large-block and sequential data flows, SSDs offer little advantage over spinning disk for what they cost. This is why the high-capacity 7.2k drives will still thrive and be around for a while.

3. The death of the 10k drive. Yes, I am calling it: I believe the 10k drive will finally rest in peace. There is no need for it, nor will there be demand for it. So, 10k drives, so long…

Like anything in technology, this is speculation, albeit from an educated and experienced point of view. This can all change at any time, but I hope this was insightful.

Posted by yobitech on October 8, 2015 at 1:42 pm under General, SCSI, SSD.

Comments Off on The End of the Rotational Disk? The Next Chapter.

It is human nature to assume that if it looks like a duck, walks like a duck and quacks like a duck, then it must be a duck. The same could be said about hard drives. They all seem to come in 2.5” and 3.5” form factors, but when we dig deeper, there are distinct differences and developments in the storage industry that will define and shape the future of storage.

The Rotating Disk or Spinning Disk

There were many claims in the 90s that the “mainframe is dead”, but the reality is that the mainframe is alive and well. In fact, many corporations still run on mainframes and have no plans to move off them. This is because there are other factors that may not be apparent on the surface but are reason enough to continue with the technology, because it provides a “means to an end”.

Another claim, in the mid 2000s, was that “tape is dead”, but again, the reality is that tape is very much alive and kicking. Although there have been many advances in disk-based alternatives to tape, tape IS the final line of defense in data recovery. Although it is slow, cumbersome and expensive, it is also a “means to an end” for most companies that can’t afford to lose ANY data.

When it comes to rotating or spinning disks, many are rooting for their disappearance. Some will even say they are going the way of the floppy disk, but just when you think there isn’t anything more to develop for the spinning disk, there are some amazing new developments. The latest is…

The 6TB Helium Filled hard drive from HGST (a Western Digital Company).

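Yes, this is no joke. It is a hermetically sealed, waterproof hard drive packed with more platters (7 of them) that runs faster and more efficiently than a conventional spinning hard drive. Because helium is far less dense than air, there is less drag and turbulence on the spinning platters, which is what makes room for the extra platters and the lower power draw. Once again, this injects new life into the spinning disk industry.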

What is fueling this kind of innovation in a supposedly “dying” technology? For one, solid state drives (SSDs) are STILL relatively expensive. Their cost has not dropped as much as I would have hoped, or as fast as most traditional electronic components, which keeps the spinning disk breed alive. The million dollar question is, “How long will it be around?” It is hard to say, because when we look deeper into the drives, there are real differences. Spinning disks are also fulfilling that “means to an end” purpose for most. Here are some of the differences…

1. Capacity

As long as there are ways to keep increasing capacity and keep the gap between SSDs and spinning disk wide enough, it will dampen the appetite for SSDs. This will trump the affordability factor because it is about value, or “cost per gigabyte”. We are now up to 6TB in a 3.5” form factor, while SSDs are around 500GB. This is the single biggest factor holding back SSD adoption.
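To make the “cost per gigabyte” comparison concrete, here is a small Python sketch. The prices are placeholders picked for illustration, not actual market prices; plug in current quotes for the drives you are comparing.

```python
def cost_per_gb(price_usd: float, capacity_gb: float) -> float:
    """Price divided by capacity: the "value" metric buyers compare."""
    return price_usd / capacity_gb


def ssd_premium(hdd_price: float, hdd_gb: float,
                ssd_price: float, ssd_gb: float) -> float:
    """How many times more an SSD costs per gigabyte than a spinning disk."""
    return cost_per_gb(ssd_price, ssd_gb) / cost_per_gb(hdd_price, hdd_gb)


if __name__ == "__main__":
    # Placeholder prices, NOT real quotes: 6TB HDD vs. 500GB SSD.
    hdd = cost_per_gb(300, 6000)
    ssd = cost_per_gb(400, 500)
    print(f"HDD: ${hdd:.3f}/GB, SSD: ${ssd:.3f}/GB")
    print(f"SSD premium: {ssd_premium(300, 6000, 400, 500):.1f}x per GB")
```

Even when the SSD is the cheaper drive to buy outright, the ratio of cost per gigabyte is what decides where bulk data ends up.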

2. Applications

Most applications do not need high performance storage. Most storage for home users is for digital pictures, home movies and static PDF files and documents, all of which are perfectly fine on large 7.2k multi-terabyte drives. The business world, or enterprise, is actually quite similar: most companies’ data is fairly static. In fact, on average, about 70% of all data is hardly ever touched again once it is written. I have personally seen customers with 90% of their data being static after it was first written. Storage vendors (Dell EqualLogic, Compellent, HP 3PAR) have been offering storage tiering that automatically moves data between tiers based on its usage characteristics, without any user intervention. This type of virtualized storage management maximizes the ROI (Return on Investment) and lowers the TCO (Total Cost of Ownership) of spinning disk in the enterprise. It has extended the life of spinning disk because it makes the most of the performance characteristics of both spinning disk and SSDs.
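The automated tiering described above can be sketched as a simple policy: rank chunks of data by recent activity, keep the hottest ones on SSD and leave static data on spinning disk. This is a hypothetical, minimal illustration in Python; the `Extent` type, the single access counter and the fixed number of SSD slots are assumptions, and each vendor’s real placement logic is its own (and far more sophisticated).

```python
from dataclasses import dataclass


@dataclass
class Extent:
    """A chunk of stored data and how often it was read or written
    during the last sampling period (hypothetical bookkeeping)."""
    name: str
    accesses: int


def assign_tiers(extents: list[Extent], ssd_slots: int,
                 min_accesses: int = 1) -> dict[str, str]:
    """Put the hottest extents on SSD and everything else on spinning disk.

    Extents with fewer than `min_accesses` hits (static data) never
    qualify for SSD, even when SSD slots are free.
    """
    ranked = sorted(extents, key=lambda e: e.accesses, reverse=True)
    placement = {}
    used = 0
    for extent in ranked:
        if used < ssd_slots and extent.accesses >= min_accesses:
            placement[extent.name] = "ssd"
            used += 1
        else:
            placement[extent.name] = "hdd"
    return placement


def static_fraction(extents: list[Extent]) -> float:
    """Share of extents that were not touched at all in the period."""
    return sum(1 for e in extents if e.accesses == 0) / len(extents)


if __name__ == "__main__":
    sample = [Extent("db-index", 950), Extent("db-log", 400),
              Extent("vm-boot", 120)]
    sample += [Extent(f"archive-{i}", 0) for i in range(7)]
    print(assign_tiers(sample, ssd_slots=2))
    print(f"static: {static_fraction(sample):.0%}")
```

Running a policy like this periodically is what lets a small SSD tier absorb most of the I/O while the mostly static bulk of the data stays on cheap spinning disk.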

3. Mean Time Between Failures (MTBF)

All drives have an MTBF rating. I don’t know exactly how vendors come up with these numbers, but they do. It is a rating of how long a device is expected to be in service before it fails. I wrote in a past blog post called “The Perfect Storm” about SATA drives failing in bunches because of the MTBF: many of these drives are put into service in massive numbers at the same time, doing virtually the same thing all of the time. MTBF is a theoretical number, and depending on how the drives are used, “mileage will vary”. MTBF ratings for these drives are so high that most drives that run for a few years will continue to run for many more. In general, if a drive is defective, it will fail fairly early in its service life. That is why there is a “burn-in” period for drives; I personally run them for a week before I put them into production. Drives that last for years eventually make it back onto the resale market, only to run reliably for many more.

MTBF for an SSD is different. Although SSDs are rated for a specific time like spinning disks, their characteristics are different. Each time a flash cell is programmed and erased it wears a little, and “write amplification” (the drive writing more data internally than the host actually sent) accelerates that wear. Cells will eventually be rendered unusable, but the drive’s firmware compensates by retiring worn cells and spreading writes across the rest. Compared to a spinning disk, which has no such cell wear, an SSD’s end of life is measurably predictable. This is both good and bad: good in the sense of predicting failure, but bad in the sense of reusability. If you can measure the remaining life of a drive, that directly affects its value.
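Two pieces of arithmetic help here. The first converts an MTBF rating into an annual failure rate under the textbook assumption of a constant failure rate; the second shows why SSD wear-out is predictable, by projecting remaining life from a rated endurance figure (total terabytes written, or TBW). This is a minimal Python sketch, and the MTBF, TBW and write-rate values are hypothetical.

```python
import math

HOURS_PER_YEAR = 8760


def annual_failure_rate(mtbf_hours: float) -> float:
    """Probability that one drive fails within a year, assuming a
    constant (exponential) failure rate derived from the MTBF."""
    return 1 - math.exp(-HOURS_PER_YEAR / mtbf_hours)


def expected_failures(drive_count: int, mtbf_hours: float) -> float:
    """Expected failures per year across a population of drives,
    treating every drive as failing independently."""
    return drive_count * annual_failure_rate(mtbf_hours)


def ssd_days_remaining(rated_tbw: float, written_tb: float,
                       daily_writes_tb: float) -> float:
    """Days until an SSD reaches its rated endurance (TB written)
    at the current write rate."""
    return max(rated_tbw - written_tb, 0) / daily_writes_tb


if __name__ == "__main__":
    # Hypothetical ratings for illustration only.
    print(f"AFR at 1.2M h MTBF: {annual_failure_rate(1_200_000):.2%}")
    print(f"Expected failures/yr in 200 drives: "
          f"{expected_failures(200, 1_200_000):.1f}")
    print(f"SSD days left: {ssd_days_remaining(1000, 400, 0.5):.0f}")
```

Note what this model leaves out: it assumes failures are independent, which is exactly what the “Perfect Storm” scenario violates. Drives from the same batch, installed on the same day and running the same workload, tend to fail close together, so real-world risk in an array can be higher than the expected-failure figure suggests. The SSD projection, for its part, is only as good as the endurance rating and a steady write rate.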

In the near future, it is safe to say that the spinning disk is going to be around for a while. Even if the cost of SSDs comes down, there are other factors that meet the needs of storage users. In the same way that other factors have kept mainframe and tape technologies around, the spinning disk has earned its place.

Long live the spinning hard drive!

Posted by yobitech on November 5, 2013 at 11:06 am under Backup, General, SCSI, SSD.

Comments Off on Where is the Storage Industry Going?.

If you are like me, you like to have the latest and greatest technology. From iPads to smart phones, I just love this stuff. If I am honest with myself, I can truly say I really don’t NEED all of it. It is just nice to have…

Many of us (mostly guys) are similar in this way, and we look at data storage the same way. We like to own the fastest drives, the smartest arrays and best-in-class technologies. In today’s tough economy, however, companies are looking for their IT departments to do more with the technology they have: leverage existing equipment and investments, and buy only if it gives them a competitive advantage. Many companies are holding off on purchases, and when they do make an investment, it is typical for them to stretch their 3-year maintenance contracts to 5 years.

Let’s face it, data is exploding and storing it is just darn expensive! The upfront costs can be very high. The golden question is: do I really need the latest and greatest? In a world where money was not a factor, I would have SSDs in all my devices and in my SAN, but unfortunately cost is a factor. SSDs are used mainly for very specific purposes, like extending cache in a SAN, or for latency-sensitive applications such as databases and virtual desktop systems. The other 95% of applications are well served by rotating media.

SAS, FC, SATA, PATA, SCSI hard drives

The evolution of disk drives can be confusing, especially if you are new to the industry. We hear a lot about SAS drives these days, but what does that mean? What is the difference between SAS, FC, SATA and SCSI hard drives? Mechanically, not a lot. With the exception of the bearings and electronics that affect the MTBF (Mean Time Between Failures), the main difference is the drive interface.

Below are the interface types that are most common on today’s hard drives.

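SAS – Serial Attached SCSI: The serial successor to parallel SCSI, and increasingly the replacement for FC drives in enterprise arrays. Based on the SCSI command set, SAS can carry 4 lanes of 6Gb/s or 3Gb/s I/O simultaneously over a single x4 wide port, and SAS controllers can also host SATA drives.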

FC – Fibre Channel: Commonly used in SAN systems as high-end, enterprise class hard drives.

SATA – Serial Advanced Technology Attachment: A commonly used interface in consumer grade hard drives. It replaced PATA (Parallel AT Attachment, originally just called ATA and also known as IDE/EIDE) drives.

SCSI – Small Computer System Interface: A set of standards for connecting peripherals to computer systems. Parallel SCSI has long been the most common interface among business-class hard drives.
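To see what the SAS “4 lanes of 6Gb/s or 3Gb/s” figures mean in throughput, here is a small Python sketch. It assumes the 8b/10b line encoding used by 3Gb/s and 6Gb/s SAS and ignores protocol overhead, so treat the results as upper bounds rather than measured speeds.

```python
def lane_mb_per_s(line_rate_gbps: float, data_bits: int = 8,
                  code_bits: int = 10) -> float:
    """Usable payload rate of one serial lane in MB/s.

    3Gb/s and 6Gb/s SAS use 8b/10b encoding, so only 8 of every
    10 bits on the wire carry data. Protocol overhead is ignored.
    """
    return line_rate_gbps * 1000 * data_bits / code_bits / 8


def wide_port_mb_per_s(lanes: int, line_rate_gbps: float) -> float:
    """Aggregate payload rate of a wide port made of several lanes."""
    return lanes * lane_mb_per_s(line_rate_gbps)


if __name__ == "__main__":
    for rate in (3, 6):
        print(f"{rate}Gb/s: {lane_mb_per_s(rate):.0f} MB/s per lane, "
              f"{wide_port_mb_per_s(4, rate):.0f} MB/s on a x4 port")
```

The wide port is what lets one SAS connection from a controller to a drive enclosure serve many drives at once.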

The bottom line is, there are alternatives. SATA and SCSI, when architected correctly, can support a major part of your data. So before you go out and make a considerable investment, ask the question, “Do I need the latest and greatest?”

Posted by yobitech on May 23, 2011 at 3:44 pm under SAS, SCSI, SSD.


People often ask me, “What’s the difference between a Seagate 1TB 7.2k drive and a Western Digital 1TB 7.2k drive?” and I usually say, the manufacturer…

Other than some differences in the mechanism, electronics and caching algorithms, generally, there is not much that is different between hard drives.

Hard drives are essentially physical blocks of storage with a designated capacity, a set rotational speed and a connection interface (SCSI, FC, SAS, IDE, SATA, etc.). Hard drive performance is usually measured in IOPS (Input/Output Operations Per Second) per drive. For example, a 15k RPM drive will yield about 175 IOPS, while a 10k RPM drive will yield about 125 IOPS.
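Where do per-drive figures like these come from? A common rule of thumb estimates random IOPS from the average seek time plus the average rotational latency (half a revolution). This Python sketch uses assumed seek times, so its results only land in the same ballpark as the numbers above; real drives vary with firmware, caching and queue depth.

```python
def avg_rotational_latency_ms(rpm: int) -> float:
    """On average the platter must turn half a revolution to reach the sector."""
    return 0.5 * 60_000 / rpm


def estimate_iops(rpm: int, avg_seek_ms: float) -> float:
    """Rough random IOPS for one drive: one I/O per (seek + rotational latency).

    Transfer time and queuing effects are ignored.
    """
    return 1000 / (avg_seek_ms + avg_rotational_latency_ms(rpm))


if __name__ == "__main__":
    # Assumed average seek times, typical of datasheets, not measured values.
    for rpm, seek_ms in ((15_000, 3.5), (10_000, 4.5), (7_200, 8.5)):
        print(f"{rpm:>6} RPM: ~{estimate_iops(rpm, seek_ms):.0f} IOPS")
```

The faster spindle helps twice: it cuts rotational latency directly, and faster drives also tend to have shorter average seeks.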

In the business setting, most companies store their information on a SAN (Storage Area Network), also known as an intelligent storage array. An intelligent storage array is commonly made up of 1 or more controllers (aka Storage Processors, or SPs) controlling groups of disk drives. The intelligence of a SAN lives in those controllers (SPs).

This “Intelligence” is the secret sauce for each storage vendor. EMC, NetApp, HP, IBM, Dell, etc. are examples of SAN vendors and each will vary on the intelligence and capabilities in their controllers. Without this intelligence, these groups of disks are known as JBOD (Just a Bunch of Disks). I know, I know, I don’t make these acronyms up.

Disks that are organized in a SAN work together to yield the IOPS needed for the backend performance that meets the service levels the application requires. For example, if an application demands 500 IOPS on average, how many disk drives do I need to adequately service it? (This is an oversimplified sizing exercise; many factors come into play when sizing, such as RAID type, read/write ratios, connection types, etc.) With each hard drive producing a fixed number of IOPS, is it possible to “squeeze” more performance out of the same set of hard drives? The answer is yes.
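The sizing question above can be sketched in a few lines of Python. This is a minimal illustration, assuming a 70/30 read/write mix, the commonly quoted RAID write penalties and the 175 IOPS per 15k drive figure; it ignores cache, capacity requirements and the minimum drive count each RAID level needs.

```python
import math

# Back-end disk I/Os generated by one front-end write, per RAID level
# (the commonly quoted write-penalty rule of thumb).
RAID_WRITE_PENALTY = {"raid0": 1, "raid1": 2, "raid10": 2, "raid5": 4, "raid6": 6}


def backend_iops(frontend_iops: int, read_pct: int, raid: str) -> float:
    """Disk-level IOPS needed once RAID write overhead is included."""
    reads = frontend_iops * read_pct / 100
    writes = frontend_iops * (100 - read_pct) / 100
    return reads + writes * RAID_WRITE_PENALTY[raid]


def drives_needed(frontend_iops: int, read_pct: int, raid: str,
                  iops_per_drive: float) -> int:
    """Smallest number of drives whose combined IOPS covers the back-end load."""
    return math.ceil(backend_iops(frontend_iops, read_pct, raid) / iops_per_drive)


if __name__ == "__main__":
    # The 500 IOPS example, assuming a 70/30 read/write mix on 15k drives.
    for raid in ("raid10", "raid5", "raid6"):
        print(raid, drives_needed(500, 70, raid, 175), "drives")
```

With these assumptions, the same 500 IOPS workload needs 4 drives in RAID 10, 6 in RAID 5 and 8 in RAID 6, which is why RAID type and read/write ratio matter so much in sizing.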

Remember the Merry-Go-Round? Why is it always more fun on the outside than on the inside of the ride? As kids, we all wanted to be on the outside of the ride, screaming, because things seemed faster there. The ride didn’t spin any faster, but the farther out you were from the center, the more distance you covered in each revolution.

The same theory holds for hard drives. The outer tracks of a hard drive always yield more data per revolution than the inner tracks, and more data per revolution means more performance. Confining data to the outer tracks also shortens the distance the heads have to travel between requests. Using the outer tracks of a hard drive to get better performance is a technique known as “short stroking” the disk. A few SAN manufacturers use this technique. One of them is Compellent (now Dell/Compellent), which calls this feature “Fast Track”. Compellent is a pioneer in the virtual storage arena, trail blazing next generation storage for the enterprise. Another vendor that does this is Pillar.
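The geometry behind short stroking can be sketched with a simple model. Assuming constant linear bit density (so data per revolution grows with track circumference) and capacity spread evenly over the platter area, this Python snippet shows how fast the innermost track in use is, and how much of the full head travel is needed, when only the outer part of the disk is used. The platter radii are assumed values, and real drives use zoned recording, so the numbers are approximate.

```python
import math


def band_inner_radius(capacity_fraction: float, r_inner: float,
                      r_outer: float) -> float:
    """Inner edge of the outermost band holding `capacity_fraction` of the
    platter's capacity, assuming uniform areal density (capacity ~ area)."""
    return math.sqrt(r_outer**2 - capacity_fraction * (r_outer**2 - r_inner**2))


def relative_transfer_rate(radius: float, r_outer: float) -> float:
    """Data per revolution at `radius` relative to the outermost track
    (proportional to track circumference at constant linear density)."""
    return radius / r_outer


def stroke_fraction(capacity_fraction: float, r_inner: float,
                    r_outer: float) -> float:
    """Share of the full radial head travel needed to cover the band."""
    edge = band_inner_radius(capacity_fraction, r_inner, r_outer)
    return (r_outer - edge) / (r_outer - r_inner)


if __name__ == "__main__":
    R_IN, R_OUT = 20.0, 46.0  # assumed recording radii in mm for a 3.5" platter
    for frac in (1.0, 0.5, 0.3):
        edge = band_inner_radius(frac, R_IN, R_OUT)
        print(f"use {frac:.0%} of capacity: slowest track at "
              f"{relative_transfer_rate(edge, R_OUT):.0%} of outer speed, "
              f"heads travel {stroke_fraction(frac, R_IN, R_OUT):.0%} of full stroke")
```

Under these assumptions, keeping data in the outer 30% of capacity keeps every track at roughly 87% or more of the outer-track rate, while the heads move across less than a quarter of their full range.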

So at the end of the day, getting back to that question, “What’s the difference between a Seagate 1TB drive and a Western Digital 1TB drive?” My answer is still the same… but how the disks are intelligently managed and utilized is what ultimately makes the difference.

Posted by yobitech on April 20, 2011 at 11:09 am under General, NAS, SAN, SAS, SCSI.


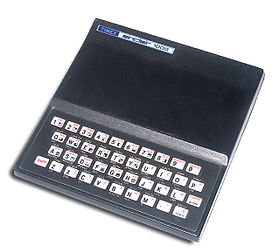

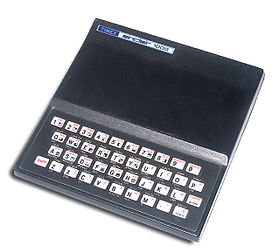

I remember when I got my first computer. It was a Timex Sinclair.

Yes, the watch company made a computer. It had about 2K of RAM and a flimsy membrane keyboard. It had no hard drive or floppy disk; its storage device was a cassette tape player. That’s right, the good old cassette tape. I bought a game on cassette tape with the computer back in the 80s, and it took about 15 minutes to load. It was painful, but worth it.

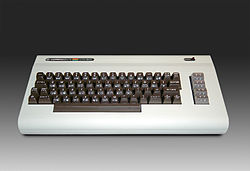

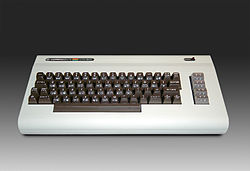

From there, I got a Commodore VIC-20 with a 5.25” floppy drive. Wow, this was fast! Going from cassette to floppy disk felt like jumping years ahead. I even used a hole-puncher to notch the side of the disk so I could use the flip side of the floppy. We didn’t have many choices back then; most games and applications loaded and ran right off removable media.

I remember when hard drives first came out. They were very expensive and out of reach of the masses. A few years later, I saved up enough money to buy a 200MB hard drive by Western Digital. I paid $400 for that hard drive, and it was worth every penny.

Since then, I have always been impressed by how manufacturers manage to get more density onto each disk every 6 months. Now, with 3TB drives coming out soon, it never ceases to amaze me.

It is interesting how storage is going in 2 directions, Solid State Disk (SSD) and Magnetic Hard Disk (HD). If SSD is the next generation, then what does that mean for the HD? Will the HD disappear? Not for a while… This is because there are 2 factors: Cost and Application.

At the present time, a 120GB SSD costs about the same as a 1TB drive, so from a capacity standpoint it makes sense to go with the 1TB drive. Ultimately, though, the Application dictates which drive(s) are used to store the data. For example, although a 1TB drive may be able to store video for a video editor, it may be too slow to process that data. The same goes for databases, where response time and latency are critical to the application.

Capacity alone simply will not cut it. This is when using multiple disks in a RAID (Redundant Array of Independent Disks) set provides performance as well as capacity. SSDs can also be used, because their access and response times are inherently faster.

As long as there is a price-to-capacity gap, the HD will be around. Architecting storage today is a complex art in this dynamic world of applications.

I hope to use this blog as a forum to shed light on storage and where the industry is going.

Posted by yobitech on April 18, 2011 at 2:16 pm under Cloud, General, RAID, SAS, SCSI, SSD.
